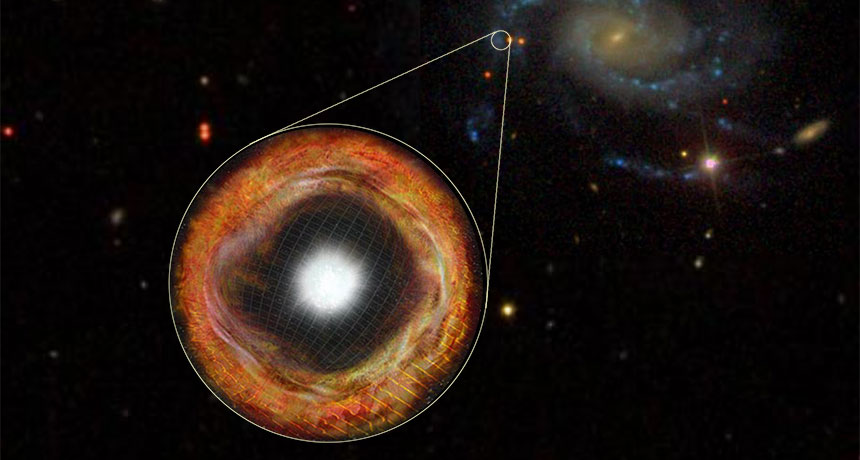

Supernova spotted shortly after explosion

Astronomers have caught a star exploding just hours after light from the eruption first reached Earth. Measurements of the blast’s light suggest that the star rapidly belched gas in the run-up to its demise. That would be surprising — most scientists think the first outward sign of a supernova is the explosion itself.

“Several years ago, to catch a supernova early would mean to detect it at several days, a week, or maybe more, after the explosion,” says astrophysicist Ofer Yaron of the Weizmann Institute of Science in Rehovot, Israel. Now, he says, “we talk about day one.” Other supernovas have been seen this early. But this is the earliest one observed with a spectrum, an accounting of the emitted light broken up by wavelength, which was taken six hours after the explosion. Yaron and colleagues report the finding online February 13 in Nature Physics.

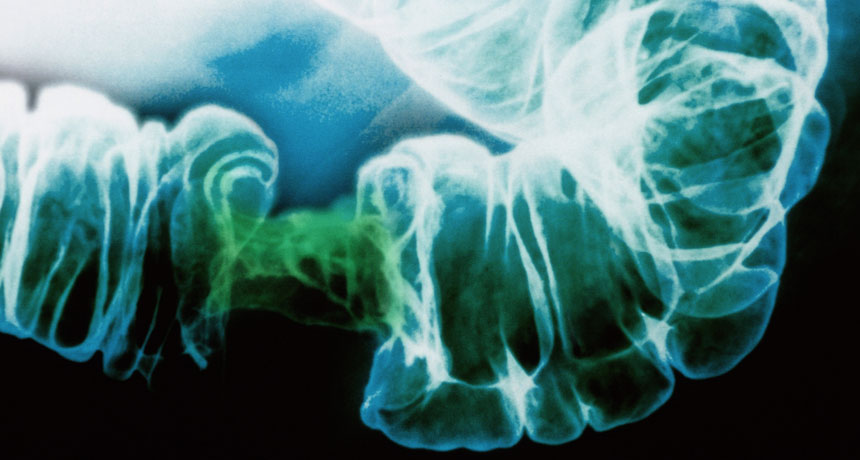

Astronomers observed the explosion, a type 2 supernova triggered by the collapse of a dying star (SN: 2/18/17, p. 24), with the Intermediate Palomar Transient Factory. This survey regularly scans the sky using a telescope at the Palomar Observatory near San Diego. The supernova appeared on October 6, 2013, in the galaxy NGC 7610, 166 million light-years from Earth in the constellation Pegasus.

Spectra taken at several intervals after the explosion painted a picture of the aftermath. A shock wave from the supernova plowed through gas surrounding the star and stripped electrons from its atoms. When the electrons later recombined with the atoms, they emitted light at specific wavelengths. Those wavelengths showed up in the spectra and revealed what had happened. The researchers concluded that the gas had been emitted shortly before the explosion, within roughly the previous year.

“This is actually very exciting if you ask me,” says astrophysicist Matteo Cantiello of the Center for Computational Astrophysics in New York City, who was not involved with the research. For typical stars on the brink of collapse, he says, “this is the first clear evidence that … the last period of their lives is not quiet.” Instead, dying stars may become unstable, rapidly spurting out material.

“That’s very, very odd,” says astrophysicist Peter Garnavich of the University of Notre Dame in Indiana. Scientists typically assume that the outer layers of such stars are detached from the internal processes that trigger the collapse, Garnavich says. No one yet knows how an impending collapse could provoke eruptions before the explosion.